Facebook introduces artificial intelligence to help detect “suicidal posts” before they are reported.

Facebook is becoming more useful than ever. The social media giant is going beyond the limits of a social network and could even become a lifesaver for some of us.

CEO Mark Zuckerberg has introduced new software to Facebook. This proactive-detection artificial intelligence (AI) scans posts for patterns of “suicidal thinking” and then sends mental health resources to the user at risk or to their friends, or contacts local first responders when necessary. Rather than waiting for user reports, the AI flags worrying posts on its own. Because these situations are often urgent, this can reduce the time it takes Facebook to get help to someone.

Previously, Facebook experimented with using AI to detect worrying posts and to surface suicide-reporting options to friends in the U.S. Now, content on Facebook from around the world will be scanned.

In a post on Facebook, Mark Zuckerberg wrote: “In the future, AI will be able to understand more of the subtle nuances of language, and will be able to spot various problems beyond suicide as well, including spotting more kinds of bullying and hate in a lesser time.”

The AI is even more useful when it identifies particularly risky or urgent user reports and routes them to moderators so they can be addressed quickly. Alongside the AI, Facebook has dedicated more moderators to handling suicide cases around the clock. To support the service, it now works with about 80 local partners, including Save.org, the National Suicide Prevention Lifeline and Forefront.

Guy Rosen, Facebook’s VP of product management, discussed suicide prevention with mental health experts, who noted that one of the best ways to prevent suicide is for people in need to hear from friends or family who care about them. This puts Facebook in a special position: it can help people who are in serious danger find and connect with friends and organizations that are willing to help.

The AI is trained to find patterns in the words and imagery commonly used in posts that were manually reported for suicide risk in the past. Comments the AI was trained to look for include “Are you OK?” and “Do you need help?”

How Suicide Reporting Works On Facebook

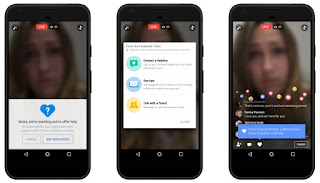

If someone expresses suicidal thoughts in any type of post (including video), Facebook’s AI is expected to recognize it, flag it to human moderators trained in suicide prevention, and make reporting options easier for other users to reach.

When a suicide-related report is received, Facebook highlights the part of the written post or video that matches suicide-risk patterns, including the part of a video that is receiving the most attention and comments from viewers. These reports receive faster responses than ordinary reports about content-policy violations.

At this stage, Facebook’s goal is to help the user at risk. Its software brings up resources from its partners in the user’s local language, along with suicide-prevention telephone hotlines and contacts for nearby authorities.

A Facebook moderator can then send the at-risk user’s location to first responders, or alert friends who can comfort the user.

As Guy Rosen put it: “One of our goals is to ensure that our team can respond worldwide in any language we support.”

Hmmm.... Facebook is really turning into something else

Yes, Benedict. Facebook is becoming more useful each day. Thanks for commenting.